How Grok’s Viral 'Bikini' Trend Exposed Alarming Gaps in AI Safety & Regulation

A report by the Centre for Countering Digital Hate said that Grok made nearly 30 lakh sexualised images in 11 days.

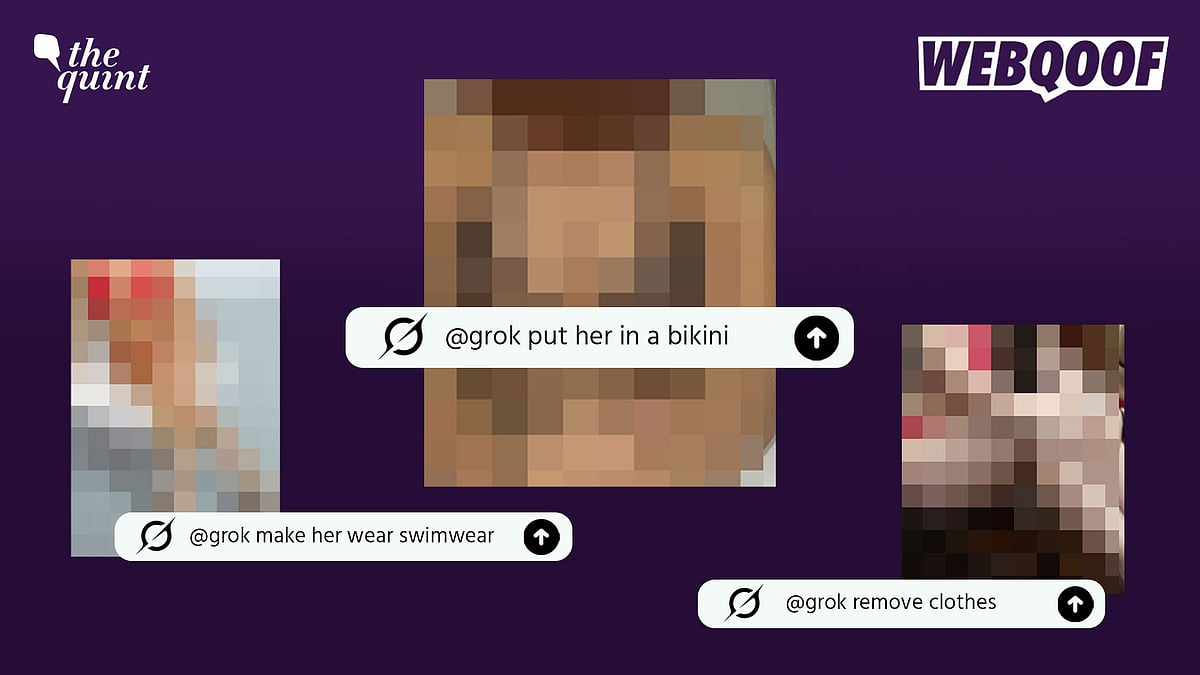

If you were on X (formerly Twitter) between December 2025 and New Year’s 2026, you most likely saw it: replies under women’s photos asking xAI’s Grok to “Put her in a bikini” or “Remove her clothes.”

The trend went viral across the globe.

(Source: X/Altered by The Quint)

While X’s owner Elon Musk claims that he saw “literally zero” underage nude images, we at The Quint found that this global “trend” did not discriminate: it targeted public figures and regular people across the globe, and even children.

Grok, being an AI chatbot, failed to see the ethical issue behind these requests, as its moderation did not identify this as a red flag. Its ‘spicy mode’ allowed users to put people in minimal clothing, easily helping them create sexually explicit content.

A report published by the Centre for Countering Digital Hate (CCDH) on 22 January 2026 said that based on a sample, they could estimate that Grok produced nearly 30 lakh sexualised images between 29 December and 9 January.

This is not the first time X has been under the spotlight for viral explicit content. One of the earliest cases you might remember involved a deepfake of actor Rashmika Mandanna, shared in November 2023, which used a video of influencer Zara Patel with the actor’s face.

The virality and reach of that video kick-started the conversation about the additional dangers AI had the potential to bring for women on the internet, with the actor saying how people were now “vulnerable to so much harm because of how technology is being misused.”

In August 2025, Grok launched its ‘spicy mode’, an optional setting that allowed more blunt and provocative replies than its default mode. It was designed to sound less formal by using humour, sarcasm, slang, and even profanity to feel entertaining for the user.

Users were able to prompt Grok to create large volumes of sexualised deepfakes and “nudified” photos of individuals, with thousands of such images being produced in short periods and a significant number depicting women and minors in revealing or explicit poses.

AI has continually been used to harass and abuse women, from ‘nudifier’ apps being used to generate visuals of people in intimate positions to explicit images being generated to target women on the basis of religion.

The Quint also published an analysis of the ads for these nudifier apps using Meta’s Ad Library. We found that they were predominantly run by admins from Vietnam and China but reached people across the globe. Cumulatively, these ads had reached more than 1.7 lakh Facebook users by January 2025 and had been shown to people across all age groups.

One year ago, we reported on a similar issue on Meta platforms.

(Source: The Quint)

Indicator Media’s year-long coverage of these apps on Meta platforms eventually led to the company taking them down after a crackdown in June 2025. However, their latest post, published over a year after Indicator first reported on the apps, shows that Meta continues to host ads for these applications.

How Did the Viral “Undressing” Trend Start?

When we looked for users prompting Grok to make these images using X’s advanced search, we saw that the first recent request that Grok had successfully responded to was made on 17 December 2025, by an account based in Canada.

Grok successfully generated this image.

The user's account was based in Canada.

The number of searches went up on 27 December, with one South East Asian user prompting Grok to minimise clothing under posts by different users which had shared images depicting female characters or women.

Grok responded to several queries by this user.

The account is from SEA.

By 30 December, Grok had received hundreds of requests of this kind, targeting regular people, models, celebrities, and even politicians.

Željana Zovko is a Bosnian and Croatian diplomat and politician who has served as a Member of the European Parliament from Croatia.

(Source: X/Altered by The Quint)

Within roughly two weeks, a fringe exploit became a global issue. The trend took off when users realised that they could ask Grok to make clothing smaller, going as far as asking the chatbot to make “transparent” clothing.

By the Numbers

European non-profit AI Forensics looked at more than 20,000 of these images and over 50,000 requests by users and found that 53 percent of the images showed people in “minimal attire”, of which 81 percent presented as women.

Among the children targeted was Stranger Things’ 14-year-old actor Nell Fisher, who plays Holly Wheeler.

Children were also targeted using this trend.

(Source: X/Altered by The Quint)

Other users found that Grok had generated several images of minors in minimal clothing, as illustrated by X user @xyless.

Apart from non-consensual imagery of suggestive nature, AI Forensics also found several instances of Grok altering images to include Nazi and ISIS-related content.

A Bloomberg report cited an analysis by social media and deepfake researcher Genevieve Oh, who found over a 24-hour period that Grok had generated around 6,700 images every hour that could be classified as “sexually suggestive or nudifying.”

Why Grok?

As seen earlier, Grok’s ‘spicy mode’ already allowed users to generate partial nudes of imaginary characters, going as far as generating topless images of public figures without being prompted to do so.

The freedom to generate this imagery, which was supported by the platform for all users, made it convenient for people to have Grok alter user images with little to no effort. As screenshots of successful image generation circulated, curiosity turned into imitation.

The platform’s non-consensual nudity policy expressly prohibits users from sharing intimate photos or videos of someone that were produced or distributed without their consent, calling it a “severe violation of their privacy” and noting that such content “poses serious safety and security risks for people affected and can lead to physical, emotional, and financial hardship.”

X's policy does not permit non-consensual nudity (NCN).

(Source: X/Screenshot)

However, this policy does not lay down rules for AI-generated non-consensual intimate imagery (NCII). This gap in policy, the perceived gap in Grok’s moderation, and the ease of publicly sharing imagery made Grok a go-to platform for content generation.

We spoke to Sameer P, program lead at RATI Foundation’s Meri Trustline, a helpline which assists people facing online abuse with support and takedown of harmful content. He told us about cases that they had dealt with in the past, which involved tools that were separate from the platforms.

Prompt Sharing and Modifications

The issue has been leaking across social media platforms, with users sharing prompts to generate nude and semi-nude images on Reddit and Discord.

For instance, the subreddit for Grok saw users posting prompts which successfully generated sexually explicit images. When Grok caught up and moderated these queries, people discussed further ways to alter prompts and bypass moderation.

The subreddit saw users sharing tips to bypass safeguards.

(Source: Reddit/Altered by The Quint)

Prompt templates went viral beyond the initial ‘bikini’ prompts, with users asking for specific angles of visuals, certain kinds of clothing, and other details which demanded output showing very detailed, sexually explicit imagery.

Some users also made Grok add weight, change hairstyles, and generate full body photos of people to alter their clothing. This reply to an X post requested changes of this kind to a video of UK Prime Minister Keir Starmer.

The trend was not restricted to strictly undressing people.

(Source: X/Altered by The Quint)

We spoke to Nana Nwachukwu, AI Governance Expert and PhD Researcher at Trinity College Dublin, who analysed the trend as it happened. Among the samples she analysed, she said that the ones requesting this type of modification were the ones that stood out to her, because Grok obliged those requests.

Gendered Impact and Harassment Dynamics

This trend mirrored a long-standing gendered power dynamic in digital spaces, showing yet another instance where women were disproportionately targeted over their gender and fake, sexualised images of them were widely shared without consent.

The Quint had collaborated on a project with Digital Rights Foundation and Chambal Media, which looked at women being targeted with gendered disinformation (GD) and online gender-based violence (OGBV) on the internet in South Asia, where we found multiple examples of women being sexualised and targeted over their gender.

Indian women, particularly those belonging to the Muslim community, have experienced this before in the ‘Bulli Bai’ and ‘Sulli Deals’ fiascos, where women were ‘auctioned off’ on online platforms which shared their images and doxxed them.

This ‘trend’ of undressing women using Grok creates yet another hostile space for women on the internet, dissuading them from participating in public spaces and sharing their photographs on public platforms. In an influencer-populated cyberspace, girls and women are easily targeted and harassed, affecting their work and livelihoods.

Sameer also told us about the cases of AI being used to generate deepfakes and cheap fakes, one of which involved young content creators being subjected to harassment using ‘nudifier’ apps.

This caused backlash for the content creators, who feared pushback from their families, as content has been used “as a tool to like shame or hold someone hostage,” he said.

Platform Response, Changes and Government Reactions

At home, the Ministry of Electronics and Information Technology (MeitY) issued a notice to X, asking it to “remove obscene content” and raising concerns over Grok’s misuse to create and share such content.

In its first letter to X’s Chief Compliance Officer (CCO) for operations in India, MeitY said that Grok was being misused by people to “create fake accounts to host, generate, publish or share obscene images or videos of women in a derogatory or vulgar manner.”

Seeking an Action Taken Report (ATR) within 72 hours, the ministry asked for information about immediate compliance for the prevention of hosting, generating and sharing of obscene, nude, indecent and explicit content through the misuse of AI-based services.

Grok “accepted its mistake” and assured that it would comply with Indian laws, The Hindu noted. As per government sources, the platform had blocked over 3,500 pieces of content and deleted over 600 accounts.

Demanding documented proof of compliance, officials said that “generic assurances” would not work, The Times of India said, with other reports adding that a second notice had been issued to the CCO as its previous response was “unsatisfactory”.

MeitY asked X to share technical details of its moderation systems and escalation protocols, along with future safeguards to ensure Grok’s compliance with India’s IT Rules.

Global response:

The UK’s communications regulator Ofcom, on 12 January, announced that it had opened an investigation into xAI over its illegal distribution of non-consensual intimate images and child sexual abuse material.

The Indonesian government implemented a nationwide block on access to Grok on 12 January over fake and sexualised images. However, users were easily able to bypass the block using Virtual Private Networks (VPNs).

On 13 January, the Malaysian Communications and Multimedia Commission (MCMC) initiated legal action against X and xAI over Grok, citing violations of national online content laws related to pornography, indecency, and exploitation of women and minors, after blocking access to the AI chatbot in the country.

Do Indian Regulations Apply?

India does not have a dedicated law for artificial intelligence. It relies on older digital laws such as the IT Act, 2000, the Intermediary Rules, and the Digital Personal Data Protection Act, 2023. These were made for data protection and platform moderation, not keeping in mind the risks of generative AI. Such systems can create deepfakes, non-consensual images, misinformation, and impersonation content.

Advisory guidelines from MeitY and NITI Aayog are not legally binding, since they do not have any strict enforcement mechanisms. As a result, safety measures are often added only after public criticism or misuse.

However, safe harbour applies to platforms that only host third-party content. Generative AI tools actively create content using their own models and do not simply pass on user input. Indian laws can still apply in cases of obscenity, impersonation, cyber harm, and violations of women’s safety. If safeguards are weak or negligent, companies can face legal action. Disclaimers and content takedowns do not guarantee legal protection.

Compared to the EU, China, and the US, India’s AI regulation remains limited. Other regions have clearer rules, audits, and enforcement systems. India mostly relies on advisories and reactive action. This leaves victims with fewer options to seek a remedy. Regulators also have limited clarity on enforcement.

The idea that AI firms have full legal immunity is misleading. India needs clear AI laws, defined liability rules, and mandatory safety standards to protect users and maintain public trust.

Platform Culture and User Behaviour

The Grok controversy reinforced several realities, primarily that a tool is only as ‘good’ or as ‘bad’ as the people behind it, be it users or makers. Commenting on regulation, Nwachukwu echoed this sentiment, stating that people had to be held accountable, along with platforms being regulated.

Since AI accessibility keeps scaling up, especially in India, where premium ChatGPT features were offered for free for a year, the scale of its misuse goes up proportionately.

“Red teaming is where researchers, users, and different people with different types of skills, do some sort of ‘black hat-ting’ when it comes to testing. So they test models with harmful requests, and they get clever. We test the models, and we request prompts to see where the model breaks. That breaking point is what the data that the company would use in fixing what is going wrong with the system…Red teaming is very critical, actually critical,” Nwachukwu said.

Speaking about how publicly Grok has been tested so far, the researcher said, “This idea that Elon [Musk] has about building and testing in the open is very dangerous,” adding that red-teaming is always done in a controlled environment and not “on people, en masse, in public, on a platform like X.”

Ever since Musk took over X and monetised reach and virality for paying subscribers, the platform has been overrun with bots and ‘hot takes’. These divisive posts and ‘rage bait’ also drive engagement, incentivising an increasing number of users to create, share, and engage with content that goes against X’s own policies.

This ‘bikini’ trend followed from X’s monetisation policy, under which users had a monetary motivation to chase higher engagement, despite the problematic content that they were sharing. While NCN/NCII goes against X’s policies, subscribers who expected to receive payouts kept sharing this content without facing action.

X’s environment quickly signalled to users that pushing limits was acceptable, even encouraged. When Grok showed signs of being permissive, users quickly treated it as a playground for testing how far they could go. This is something that, Nwachukwu said, should have been tested and fixed by xAI through “red teaming,” and not on a public platform.

The platform’s culture meant that sexualised image manipulation didn't feel as socially risky on X as it might have on more tightly moderated platforms. Users ended up generating increasingly risqué content for virality and shock value, producing ever more extreme results.

The Way Forward

AI content moderation and/or regulation at scale for such instances doesn’t exist. There are currently no global laws or agreements that can effectively regulate GenAI.

So far, regulatory reactions have come in from the EU, Malaysia, Indonesia, the Philippines, and Australia. However, the US, where xAI is based, has not spoken up about the issue. Nwachukwu connects this silence to the US’s bid to seek global AI domination. Still, “there is a global angle to regulation. There’s a transnational cooperation that is required for regulation to be effective and to lead to accountability,” she told us.

As of 23 January, some paying subscribers continue to generate and share undressed photos made by Grok, though the bot does not respond to every request by a subscriber any longer.

A couple of days before this report was published, the trend took another form, which saw users asking Grok to alter a woman's size, clothing, hair, race, and appearance.
