If you spend even a few minutes scrolling through Instagram or YouTube, chances are you've stumbled upon clips showing reporters vanishing into potholes, bystanders reacting dramatically to accidents, or surreal interviews unfolding in bizarre settings. They’re comic, uncanny, and increasingly everywhere.

Welcome to the strange world of AI slop: a rising wave of low-quality, Artificial Intelligence (AI)-generated videos flooding our feeds.

These clips may seem like harmless situational comedy at first glance, but under the surface, they're raising serious concerns about misinformation, virality, and the collapsing boundary between reality and fabrication.

Look at the video below. It shows a woman questioning a school teacher while both of them are stuck on a waterlogged road. The teacher replies that he can't take leave, as his students are supposed to take a Math test.

Speaking to The Quint, Ami Kumar, co-founder of Contrails AI, said that he has seen an increase in people reaching out to him to verify AI-generated content.

"When we started our work at Contrails in 2023, most people had barely heard of deepfakes. But since then, we've seen a sharp rise in requests for deepfake detection from fact-checkers, law enforcement agencies, and online platforms. The growing accessibility of generative AI has triggered global concern, with countries like the US introducing the Take It Down Act and the UK’s Ofcom pushing forward efforts to criminalise malicious deepfake use..."
Ami Kumar, co-founder, Contrails

Through this story, The Quint's WebQoof looks at how such slop is flooding the internet and how it can fuel the spread of mis- and disinformation.

Content, Content Everywhere, But Should You Really Share?

When we went through the Instagram handle '@bhairalbillu', which had shared the video above, we found that it was filled with similar AI-generated clips.

The account featured videos of purported journalists falling into waterlogged roads, as well as humorous interviews, a theme that recurred across various Instagram handles.

The handle's bio carried a link to a website where the user was also offering a course on creating "realistic AI videos" using Veo 3, Google's AI video-generation tool.

Another user, 'reelsbazaar34', shares similar content on their account. The screenshot below shows the common identifiers: a reporter conducting interviews, or vehicles meeting with dramatic accidents.

Some of these clips have views running into lakhs, which highlights the reach and engagement that slop content is getting on social media platforms.

Under a clip shared by the Instagram user '@bandar_ki_dunia', who regularly posts such AI-generated content, we found several comments that painted a concerning picture.

While one user pointed out that AI is dangerous, another said they struggled to tell AI from reality.

While some may argue that such content is merely comic relief, AI-generated visuals like these could soon become a major problem, much as manipulated visuals have been in the past.

Kumar agrees that AI slop can increase the spread of mis- and disinformation on social media platforms. He said, "As algorithms prioritise engagement and volume, the business of mis- and disinformation thrives."

"What makes it worse now is the rapid advancement of generative AI: creating hyper-realistic deepfake videos and audios is no longer technically complex or expensive. This ease of production, coupled with platforms that reward virality over veracity, creates a dangerous ecosystem where false narratives can spread faster than ever before."
Ami Kumar, co-founder, Contrails

AI Meets Advertising: A New Model?

As the phenomenon gained traction on the internet, advertisers, too, tried their hand at using it to promote their brands and products. Don't believe us? Look at the video below.

After the women in the clip fall from the rickshaw, one of them shouts, "You broke my back. Now, how will I visit 'design by pakiza'?"

If you think that was a one-off, think again. Sample this: a video showing two women standing on a boat on a waterlogged road while being interviewed by a reporter.

The reporter asks the women where they are going, and they reply that they are visiting a store because it has good offers.

Notably, AI slop has affected other social media platforms as well. According to recent reports, YouTube has announced that it is tightening the monetisation rules of its YouTube Partner Program (YPP) to deal with the problem of AI-generated content.

YouTube said that its "repetitious content" policy is being renamed "inauthentic content", under which mass-produced and repetitive content will be deemed ineligible for monetisation.

The Slop & the Things That Never Happened

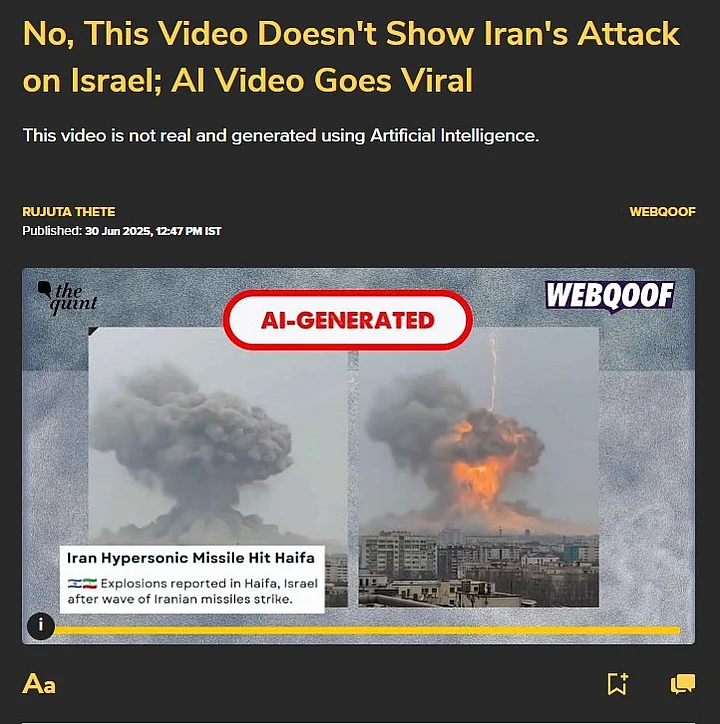

Amid the escalating conflict between Israel and Iran, a video shared in June went viral with the claim that it showed people from Israel apologising to Iran and asking it to stop the war.

This post, shared by X (formerly Twitter) Premium user '@SirRavishFC', had gained over a lakh views on the platform.

Team WebQoof debunked the clip and found that it had been generated using AI tools. A watermark on the clip indicated that it was made with Google's Veo 3.

We also found another video in which a purported Israeli soldier is heard urging Iran to stop its attacks and claiming that half of Israel has been destroyed.

Predictably, this video, too, was generated using AI and did not show any real footage.

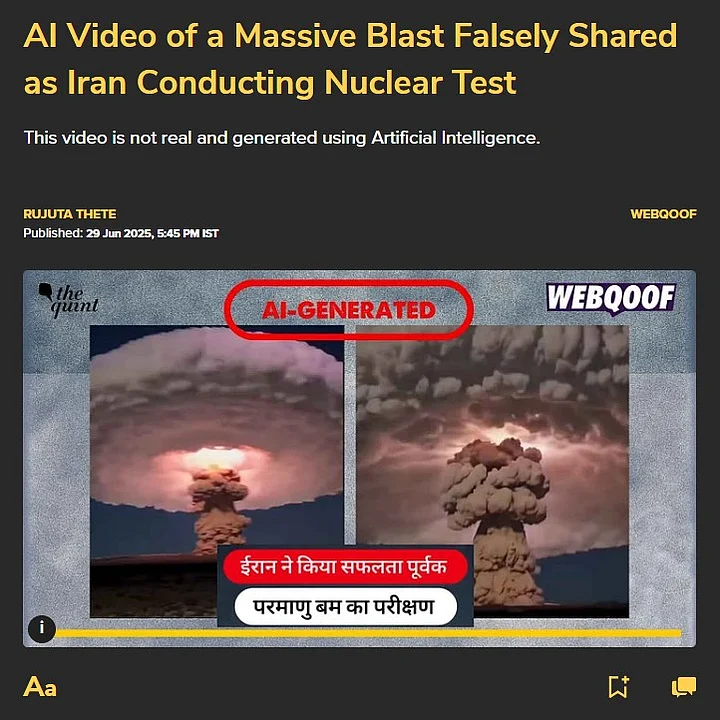

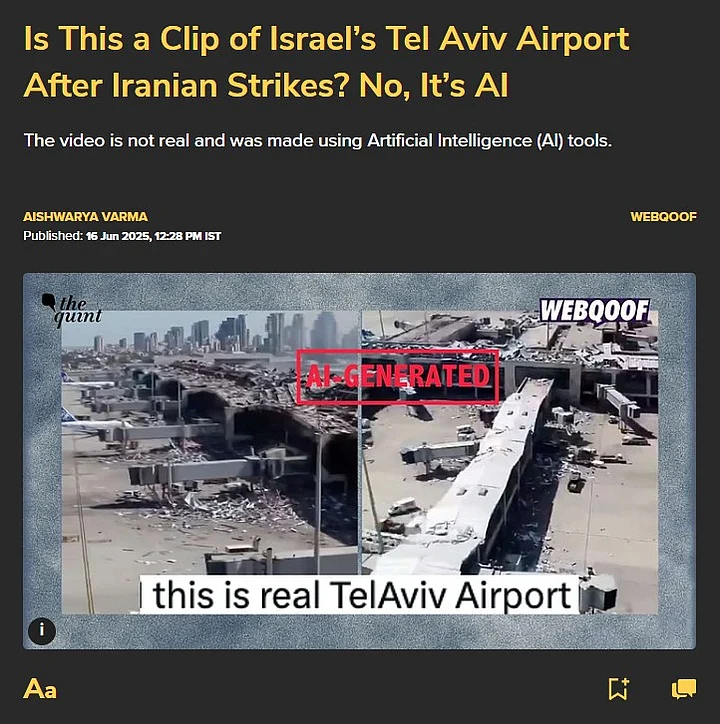

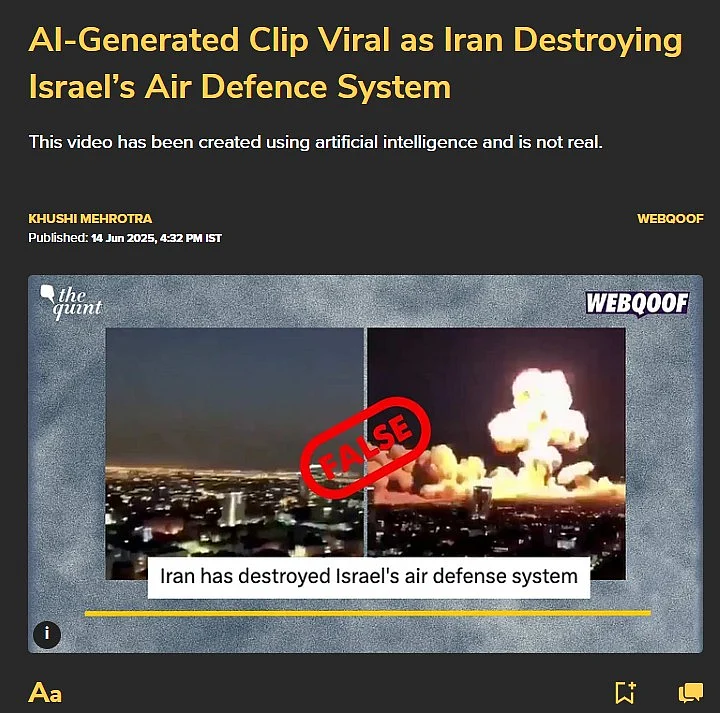

In the past month, Team WebQoof has debunked several AI-generated videos that were falsely shared as real visuals from either Israel or Iran, amid the tensions between the two countries.


News outlet Politico highlighted how AI slop spread during the Israel-Iran war, with users sharing AI-generated content as evidence of real-life damage to support their narratives.

The report also discussed how leaders from different countries were sharing AI-generated visuals, which could erode public trust and reduce people's reliance on the media.

That said, social media users deploying unrelated visuals to further their propaganda is nothing new.

For example, pro-Russian accounts have previously used edited and manipulated visuals to target countries that imposed sanctions on Russia. Our fact-checking team published a deep dive on this, which can be read here.

Liar’s Dividend: How AI Slop Fuels Denial and Doubt

The rise of AI slop also paves the way for a more concerning phenomenon: the liar’s dividend.

As AI-generated content becomes more sophisticated, it gives bad actors a convenient excuse to dismiss authentic evidence as fake. This is already happening to some extent: real footage or audio has been brushed off as AI fabrication, especially when it is politically or personally damaging.

A study from Georgia Tech found that even the suggestion of deepfakes can reduce trust in accurate media, affecting how people evaluate both news and authority figures.

When we asked Kumar how slop could affect information integrity on social media, he said, "We’re witnessing a real decay in our information systems. The old adage, 'seeing is believing', no longer holds true. We’re entering a new era where people will have to carefully curate and question their information sources."

"Media literacy is no longer optional; it’s essential. From my work in schools and colleges across India, I can confidently say that most deepfakes are never debunked. What’s even more alarming is the rise of medical and economic deepfakes: scams involving fake medicines and Ponzi schemes that are targeting unsuspecting individuals. It’s a serious threat to both public trust and personal safety."
Ami Kumar, co-founder, Contrails

While the full impact of slop on the internet remains to be seen, the damage has already begun, with ordinary users overwhelmed by information from unverified sources.

So, the next time you come across such reels, stop and verify before sharing. Check official sources, or reach out to fact-checking organisations like The Quint, which can help you verify or debunk such clips.

(With inputs from Tanishq Khare.)

(Not convinced of a post or information you came across online and want it verified? Send us the details on WhatsApp at 9540511818, or e-mail it to us at webqoof@thequint.com and we'll fact-check it for you. You can also read all our fact-checked stories here.)